Instagram To Roll Out Feature That Will Allow Users To Shadowban Their Bullies

Instagram’s next big fix for online bullying is coming in the form of artificial intelligence-flagged comments and the ability for users to restrict accounts from publicly commenting on their posts.

The team will soon launch a test that gives users the power to essentially “shadow ban” someone from their account: the account holder can “restrict” another user, which makes that user’s comments visible only to the restricted user. Restricting also hides from that user when the account holder is active on Instagram or when they’ve read a direct message.

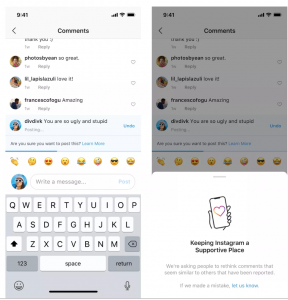

The company also separately announced today that it’s rolling out a new feature that uses AI to flag potentially offensive comments and ask the commenter if they really want to follow through with posting. Commenters will be given the opportunity to undo their comment, and Instagram says that during tests, the prompt encouraged “some” people to reflect and undo what they wrote. Clearly, that “some” stat isn’t concrete, and presumably, people posting offensive content know that they’re doing so, but maybe they’ll take a second to reconsider what they’re saying.

Instagram has already tested multiple anti-bullying features, including an offensive comment filter that automatically screens bullying comments that “contain attacks on a person’s appearance or character, as well as threats to a person’s well-being or health,” along with a similar feature for photos and captions. The features are much needed, but they might not actually do much to prevent teens from harassing each other. In a report in The Atlantic last year, reporter Taylor Lorenz wrote that teens more often create entire hate accounts dedicated to bullying specific people than bully on a main feed. Users can report these accounts, but it can take time before any action is taken. These new features, at least, are immediate and more helpful, if that’s where the bullying is happening.